Development of sound event annotating tool with incorporated few-shot and active learning – MSc thesis project

The use of long-term autonomous recorders in bioacoustics has increased steadily over the past few decades. As a result, wildlife researchers and bioacousticians now collect thousands of hours of recordings, from which they must manually extract the sound events of interest. Machine learning algorithms for automatic sound event extraction and analysis are promising. However, they generally work well only on large, well-defined, curated datasets whose sound events have already been identified and can be used for training and validation. To bring these methods to the dataset at hand, the candidate in this project will design and prototype a modern annotation platform for audio and bioacoustics research that couples an intuitive labelling UI with state-of-the-art machine learning. The tool will provide waveform/spectrogram views, rapid keyboard/mouse labelling, and flexible schema management. It will also use few-shot learners to "cold-start" models from just a handful of examples per class. An active-learning loop will continuously surface the most informative or uncertain clips for review, maximizing annotation efficiency and model quality with minimal human effort. Emphasis will be placed on developing a tool that can be used for wildlife monitoring and/or environmental soundscape analysis. The outcome will be a usable, extensible open-source tool and a thesis evaluating gains in labelling speed and accuracy compared with conventional workflows.
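The few-shot cold start and the active-learning loop described above could be sketched roughly as follows. This is a minimal illustration under stated assumptions, not the tool's intended design. It assumes that some pretrained audio model (hypothetical, not specified here) has already converted each clip into a fixed-length embedding vector. For the few-shot learner, it uses a prototypical-network-style nearest-class-mean classifier. For choosing which clips to surface for review, it uses entropy-based uncertainty sampling. All function names and the toy data are illustrative.

```python
import math
from typing import Dict, List, Sequence, Tuple

# Hypothetical: each clip is represented by a fixed-length embedding vector
# produced by some pretrained audio model (not specified in the project text).
Embedding = Sequence[float]


def class_prototypes(support: Dict[str, List[Embedding]]) -> Dict[str, List[float]]:
    """Few-shot step: average the handful of labelled embeddings per class.

    Each class must have at least one labelled example.
    """
    protos: Dict[str, List[float]] = {}
    for label, vectors in support.items():
        dim = len(vectors[0])
        protos[label] = [sum(v[i] for v in vectors) / len(vectors) for i in range(dim)]
    return protos


def predict_proba(x: Embedding, protos: Dict[str, List[float]]) -> Dict[str, float]:
    """Softmax over negative squared Euclidean distances to each class prototype."""
    logits = {
        label: -sum((a - b) ** 2 for a, b in zip(x, p)) for label, p in protos.items()
    }
    top = max(logits.values())  # shift by the max logit for numerical stability
    exps = {label: math.exp(v - top) for label, v in logits.items()}
    total = sum(exps.values())
    return {label: e / total for label, e in exps.items()}


def entropy(probs: Dict[str, float]) -> float:
    """Shannon entropy in nats; higher means the model is less certain."""
    return -sum(p * math.log(p) for p in probs.values() if p > 0)


def most_uncertain(
    pool: Dict[str, Embedding], protos: Dict[str, List[float]], k: int
) -> List[Tuple[str, float]]:
    """Active-learning step: rank unlabelled clips by entropy and return the top k."""
    scored = [(clip_id, entropy(predict_proba(x, protos))) for clip_id, x in pool.items()]
    scored.sort(key=lambda item: item[1], reverse=True)
    return scored[:k]


# Toy example with made-up 2-D embeddings (illustrative only).
support = {
    "bird": [[0.0, 1.0], [0.2, 0.9]],
    "frog": [[1.0, 0.0], [0.9, 0.1]],
}
pool = {"clip_a": [0.1, 1.0], "clip_b": [0.5, 0.5], "clip_c": [1.0, 0.0]}

protos = class_prototypes(support)
review_queue = most_uncertain(pool, protos, k=2)
print(review_queue)
```

In this toy example, `clip_b` lies between the two class prototypes, so it is the first clip surfaced for review. In a full loop, the reviewer's labels for the surfaced clips would be added to the support set. The prototypes would then be recomputed and the remaining pool re-ranked. Entropy is only one possible uncertainty measure. Margin and least-confidence sampling are common alternatives.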

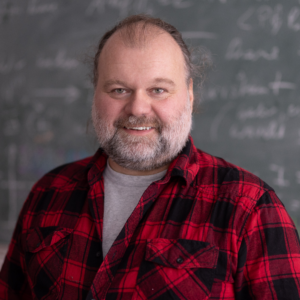

Peter Balazs
