4th Inaugural Lecture on AI, Neuroscience & Explainability

The 4th IT:U Inaugural Lecture brought together leading scholars, industry leaders, and the general public to explore the intersection of AI, neuroscience, and explainability. Founding Professors Nina Hubig and Jie Mei led the discussion, engaging attendees both online and in person.

Explainable AI in High-Stakes Decisions – Prof. Nina Hubig

Prof. Nina Hubig explored how AI can improve decision-making in healthcare, mental health, and patient communication, but only if people can trust its reasoning. She emphasized that AI needs to provide clear, understandable explanations, a key principle in the EU AI Act, so that AI systems can justify their decisions, particularly in high-risk fields like medicine and law. Real-world applications include:

- AI in Medicine: The Example of Cardiology – AI predicts intervention success based on patient data, but without explanation, both the doctor and patient hesitate. Prof. Hubig studies how explainability can help cardiologists interpret high-risk predictions while ensuring patient-centered communication.

- Mental Health & AI – AI assesses mental health through speech and facial analysis yet lacks human-like empathy. Her research explores how integrating empathy into AI can improve trust and patient adherence to treatment.

- AI-Assisted Patient Communication & Consent – AI explains medical procedures in telehealth, but balancing legal accuracy with empathetic dialogue remains a challenge. Prof. Hubig investigates how AI can enhance patient communication while ensuring clarity and respect.

- Neuroscience and Explainable AI (NeuroXAI) – This direction aims to enhance AI to better understand brain function, particularly in neurodevelopment and autism spectrum disorders. It closely aligns with Prof. Jie Mei’s research, creating opportunities for collaboration.

“AI acts as a reflection of society, mirroring our values, biases, and complexities. Ultimately, the challenge is not just about understanding or explaining AI, but about deepening our understanding of ourselves—and who we aspire to be.”

— Nina Hubig, Assistant Professor for Explainable Artificial Intelligence

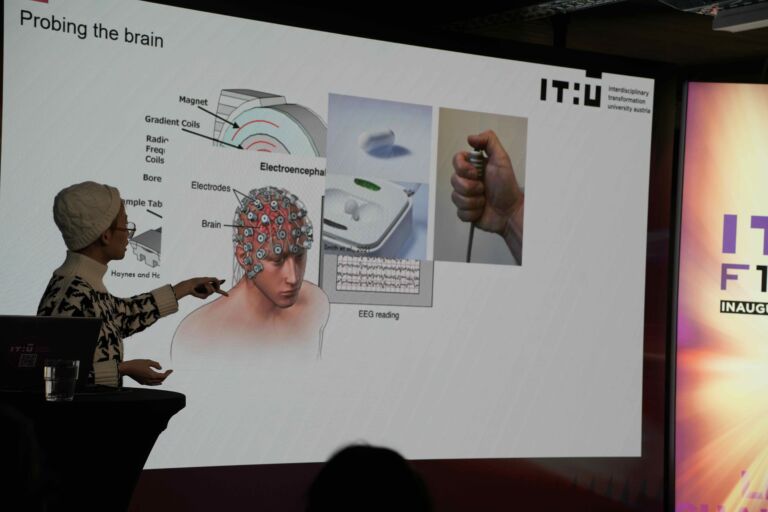

Using AI to Understand the Brain – Prof. Jie Mei

Prof. Jie Mei’s research bridges AI and neuroscience, using AI to enhance brain research and applying neuroscience principles to improve AI models. Prof. Mei highlighted that neuroscience remains an evolving field, filled with uncertainties that parallel the challenges in AI research. Her work contributes to advancements in both fields, with applications such as:

- AI for Early Detection of Neurodegenerative Diseases – AI models could enable earlier detection of Parkinson’s disease through olfactory changes. They also help identify brain regions affected as the disease progresses.

- Simulating Brain Pathology with Artificial Neural Networks (ANNs) – ANNs can help model perceptual and cognitive processes in neurodevelopment and neurodegeneration, offering insights into brain disorders.

- Neuro-Inspired AI for Smarter Technology – This research focuses on improving the robustness and efficiency of models, leading to better learning agents such as robots and adaptive AI systems. While highly theoretical, it offers valuable insights for the future of AI development.

“Neuroscience is a young field, named only about 60 years ago, and it is still searching for a unifying theory. For me, its beauty lies not only in the brain’s complexity but also in the many layers of uncertainty we face—the uncertainty of what we don’t know, the uncertainty of which parts of our knowledge may be wrong, and the uncertainty of which approaches are better for studying the brain.”

— Jie Mei, Assistant Professor for Computational Neuroscience

Innovation Through Interdisciplinarity

At IT:U, interdisciplinary research is a driving force behind innovation. By combining AI, neuroscience, and medicine, this research connects technology, human health, and real-world impact. Cross-disciplinary collaboration at IT:U fosters breakthroughs that would not be possible within traditional academic boundaries.

“The inaugural lectures by Nina Hubig and Jie Mei mark a milestone in interdisciplinary research at the intersection of artificial intelligence (AI), neuroscience, and medicine. They impressively demonstrated how explainable AI can help us better understand the brain’s complex processes and gain new scientific insights. In particular, AI has the potential to revolutionize the early detection and treatment of neurodegenerative diseases such as Parkinson’s. At the same time, the close collaboration between these disciplines opens up new possibilities for more precise diagnoses and personalized therapies—a crucial advancement that can only be achieved with transparent and comprehensible AI decision-making processes.”

– Stefanie Lindstaedt, IT:U Founding President

Our Next Inaugural Lectures

Our Inaugural Lecture Series continues with insightful discussions on social norms, digital transformation, and interdisciplinary collaboration, featuring experts in game theory, human rights, and learning sciences.

📅 2 April 2025: “An Interdisciplinary Approach to Social Norms and Practices: Theory, Work, and Rules” – Christian Hilbe (Professor of Game Theory and Evolutionary Dynamics) and Ben Wagner (Professor of Human Rights and Technology).

📅 29 April 2025: “(Inter-)Professional Transformations: Why Process Matters” – Sebastian Maximilian Dennerlein (Assistant Professor for Digital Transformation in Learning).